Table of Contents

1.1 Continuous-Time and Discrete-Time Signals

1.2 Energy and Power

1.3 Transformations

1.4 Periodic Signals

1.5 Even and Odd Signals

1.6 Exponential Signals

1.7 Unit Impulse and Unit Step

1.8 Interconnection of Systems

1.9 Basic Properties

1 Signals and Systems

1.1 Continuous-Time and Discrete-Time Signals

Signals are represented mathematically as functions of one or more independent variables. Here, our attention is focused on signals involving a single independent variable, which we will usually refer to as time: $t$ for continuous-time signals and $n$ for discrete-time signals.

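For continuous-time signals, we will enclose the independent variable in parentheses, for instance $x(t)$. For discrete-time signals, we will use brackets, for instance $x[n]$, where $n$ takes integer values only.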

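Alternatively, a very important class of discrete-time signals arises from the sampling of continuous-time signals. In this case, the discrete-time signal $x[n]$ represents successive samples of an underlying continuous-time signal $x_c(t)$, for example $x[n] = x_c(nT)$ for a sampling period $T$.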

1.2 Energy and Power

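In many applications, the signals considered are directly related to physical quantities such as power and energy in a physical system. As a starting example, consider a voltage $v(t)$ across a resistor of resistance $R$, producing a current $i(t)$. The instantaneous power is $p(t) = v(t)\,i(t) = \frac{1}{R}v^2(t)$. The total energy expended over the time interval $t_1 \le t \le t_2$ can be written as:

$$\int_{t_1}^{t_2} p(t)\,dt = \int_{t_1}^{t_2} \frac{1}{R}\,v^2(t)\,dt$$

and the average power over this interval as:

$$\frac{1}{t_2 - t_1}\int_{t_1}^{t_2} p(t)\,dt = \frac{1}{t_2 - t_1}\int_{t_1}^{t_2} \frac{1}{R}\,v^2(t)\,dt$$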

With these simple physical examples in mind, it is conventional to use similar terminology for power and energy for any continuous-time signal $x(t)$ or discrete-time signal $x[n]$, whether or not it represents a physical quantity.

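The total energy of a signal over a finite time interval $t_1 \le t \le t_2$ (or $n_1 \le n \le n_2$ in discrete time) is:

$$E = \int_{t_1}^{t_2} |x(t)|^2\,dt, \qquad E = \sum_{n=n_1}^{n_2} |x[n]|^2$$

The time-averaged power over this interval is:

$$P = \frac{1}{t_2 - t_1}\int_{t_1}^{t_2} |x(t)|^2\,dt, \qquad P = \frac{1}{n_2 - n_1 + 1}\sum_{n=n_1}^{n_2} |x[n]|^2$$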

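For signals considered over an infinite time interval ($-\infty < t < \infty$ or $-\infty < n < \infty$), the total energy is defined as a limit:

$$E_\infty = \lim_{T\to\infty}\int_{-T}^{T} |x(t)|^2\,dt, \qquad E_\infty = \lim_{N\to\infty}\sum_{n=-N}^{N} |x[n]|^2$$

Note that for some signals (for instance, a non-zero constant signal), this integral or sum might not converge, meaning such signals have infinite total energy. The time-averaged power over an infinite interval is then defined as:

$$P_\infty = \lim_{T\to\infty}\frac{1}{2T}\int_{-T}^{T} |x(t)|^2\,dt, \qquad P_\infty = \lim_{N\to\infty}\frac{1}{2N+1}\sum_{n=-N}^{N} |x[n]|^2$$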

These definitions allow us to identify three important classes of signals:

- Finite total energy signals (energy signals): These have total energy $E_\infty < \infty$. For such signals, it follows that the average power is $P_\infty = 0$.
- Finite average power signals (power signals): These have average power $0 < P_\infty < \infty$. For such signals, it follows that $E_\infty = \infty$. Periodic signals are a common example.
- Signals with neither finite total energy nor finite average power, such as $x(t) = t$.
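As a rough numerical illustration of these three classes, the sketch below approximates the total energy and the time-averaged power of a discrete-time signal over the symmetric window $-N \le n \le N$ and prints them for growing $N$. The helper names `energy` and `average_power` and the three test signals are illustrative assumptions, not part of the notes.

```python
def energy(x, N):
    """Energy of x[n] over the finite window -N <= n <= N."""
    return sum(abs(x(n)) ** 2 for n in range(-N, N + 1))

def average_power(x, N):
    """Time-averaged power of x[n] over the same window (2N + 1 samples)."""
    return energy(x, N) / (2 * N + 1)

# Hypothetical example signals, one per class
decaying = lambda n: 0.5 ** n if n >= 0 else 0.0  # finite energy, zero average power
constant = lambda n: 2.0                          # infinite energy, finite average power
ramp = lambda n: float(n)                         # neither quantity is finite

for N in (10, 100, 500):
    print(N,
          energy(decaying, N), average_power(decaying, N),
          energy(constant, N), average_power(constant, N),
          average_power(ramp, N))
```

As $N$ grows, the energy of `decaying` settles near $4/3$ while its average power shrinks towards zero, the average power of `constant` stays at 4 while its energy keeps growing, and the average power of `ramp` grows without bound.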

1.3 Transformations

A central concept in signal and system analysis is the transformation of an independent variable (usually time) of a signal. Such transformations are fundamental to understanding how signals are modified or how different signals relate to one another. Examples of systems performing signal transformations are abundant, including audio equalisers that modify the spectrum of a music signal or medical imaging systems that reconstruct an image from sensor data.

Some important and very fundamental transformations of the time variable are:

- Time shift: $y(t) = x(t - t_0)$. This shifts the signal along the time axis by $t_0$. If $t_0 > 0$, the signal is delayed; if $t_0 < 0$, the signal is advanced.
- Time reversal (or reflection): $y(t) = x(-t)$. This reflects the signal about the time origin $t = 0$.
- Time scaling: $y(t) = x(at)$. If $|a| > 1$, the signal is compressed in time. If $|a| < 1$, the signal is expanded in time. If $a < 0$, it also involves time reversal.
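The three transformations can be sketched as higher-order functions that take a signal (modelled here as a Python function of time) and return the transformed signal. The function names and the test pulse `ramp_pulse` are assumptions made for illustration only.

```python
def time_shift(x, t0):
    """Return y with y(t) = x(t - t0): a delay for t0 > 0, an advance for t0 < 0."""
    return lambda t: x(t - t0)

def time_reverse(x):
    """Return y with y(t) = x(-t)."""
    return lambda t: x(-t)

def time_scale(x, a):
    """Return y with y(t) = x(a * t): compression for |a| > 1, expansion for |a| < 1."""
    return lambda t: x(a * t)

def ramp_pulse(t):
    """Hypothetical test signal: x(t) = t on 0 <= t <= 1 and zero elsewhere."""
    return t if 0 <= t <= 1 else 0.0

delayed = time_shift(ramp_pulse, 2.0)     # pulse now occupies 2 <= t <= 3
advanced = time_shift(ramp_pulse, -2.0)   # pulse now occupies -2 <= t <= -1
reflected = time_reverse(ramp_pulse)      # pulse now occupies -1 <= t <= 0
compressed = time_scale(ramp_pulse, 2.0)  # pulse now occupies 0 <= t <= 0.5
expanded = time_scale(ramp_pulse, 0.5)    # pulse now occupies 0 <= t <= 2

print(delayed(2.5), advanced(-1.5), reflected(-0.5), compressed(0.25), expanded(1.0))
```

Each of the five printed values corresponds to the original signal evaluated at $t = 0.5$, which makes it easy to see where that point of the pulse has moved.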

1.4 Periodic Signals

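A very important class of signals encountered frequently is periodic signals. A continuous-time signal $x(t)$ is periodic if there is a positive value $T$ such that $x(t) = x(t + T)$ for all $t$.

In other words, a periodic signal is unchanged by a time shift of $T$. The smallest positive $T$ for which this holds is called the fundamental period $T_0$. Analogously, a discrete-time signal $x[n]$ is periodic if $x[n] = x[n + N]$ for all $n$ and some positive integer $N$.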

1.5 Even and Odd Signals

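Another set of useful properties of signals relates to their symmetry under time reversal. A signal $x(t)$ is considered even if it is identical to its time-reversed counterpart:

$$x(-t) = x(t)$$

A signal is considered odd if it is the negative of its time-reversed counterpart:

$$x(-t) = -x(t)$$

An odd signal must be zero at time zero (if defined at $t = 0$), since $x(0) = -x(0)$ implies $x(0) = 0$.

Any signal can be uniquely decomposed into a sum of an even part and an odd part:

$$x(t) = x_e(t) + x_o(t)$$

where the even part $x_e(t)$ and the odd part $x_o(t)$ are given by:

$$x_e(t) = \frac{1}{2}\left[x(t) + x(-t)\right], \qquad x_o(t) = \frac{1}{2}\left[x(t) - x(-t)\right]$$

(Analogous definitions apply for discrete-time signals $x[n]$.)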
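A minimal sketch of the even/odd decomposition, applied to the discrete-time unit step as an assumed test signal. The even part is half the sum of the signal and its time reversal, and the odd part is half their difference; the helper names are illustrative.

```python
def even_part(x):
    """Even part: x_e[n] = (x[n] + x[-n]) / 2."""
    return lambda n: 0.5 * (x(n) + x(-n))

def odd_part(x):
    """Odd part: x_o[n] = (x[n] - x[-n]) / 2."""
    return lambda n: 0.5 * (x(n) - x(-n))

# Hypothetical test signal: the unit step, which is neither even nor odd
step = lambda n: 1.0 if n >= 0 else 0.0

xe, xo = even_part(step), odd_part(step)
for n in range(-3, 4):
    # The last column reconstructs the original signal from its two parts
    print(n, xe(n), xo(n), xe(n) + xo(n))
```

The odd part is zero at $n = 0$, and adding the two parts recovers the step at every $n$.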

1.6 Exponential Signals

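Consider the continuous-time complex exponential signal of the form $x(t) = Ce^{at}$, where $C$ and $a$ are, in general, complex numbers. Depending on the values of these parameters, the signal exhibits several different characteristics:

- Real exponential signals: $C$ and $a$ are real numbers.
  - If $a < 0$, $x(t)$ represents an exponential decay.
  - If $a > 0$, $x(t)$ represents an exponential growth.
  - If $a = 0$, $x(t) = C$ is a constant (DC signal).
- Periodic complex exponential signals (purely imaginary $a$): Let $a = j\omega_0$, where $\omega_0$ is real. Then $x(t) = Ce^{j\omega_0 t}$. This signal is periodic with fundamental period $T_0 = 2\pi/|\omega_0|$ (if $\omega_0 \neq 0$). If $\omega_0 = 0$, it is a DC signal, which is periodic with any period $T > 0$. Like other non-zero periodic signals, these have infinite total energy but finite average power (specifically, $P_\infty = |C|^2$).
- General complex exponential signals: Let $C = |C|e^{j\theta}$ and $a = r + j\omega_0$. Then $x(t) = |C|e^{rt}e^{j(\omega_0 t + \theta)}$.
  - If $r = 0$: $x(t)$ is purely sinusoidal (as in the periodic case above).
  - If $r > 0$: $x(t)$ is a sinusoidal signal multiplied by an exponentially increasing envelope.
  - If $r < 0$: $x(t)$ is a sinusoidal signal multiplied by an exponentially decaying envelope.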
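The behaviour of $x(t) = Ce^{at}$ can be checked numerically with Python's `cmath` module. The sketch below assumes illustrative values for $C$ and $\omega_0$, confirms that a purely imaginary exponent gives a signal that repeats after $2\pi/|\omega_0|$, estimates its average power over one period with a Riemann sum, and evaluates the envelope of a decaying case.

```python
import cmath
import math

def complex_exponential(C, a):
    """Return the signal x(t) = C * exp(a * t) for complex C and a."""
    return lambda t: C * cmath.exp(a * t)

C = 2 * cmath.exp(1j * math.pi / 4)  # assumed amplitude, |C| = 2
omega0 = 2 * math.pi * 5             # assumed angular frequency (5 Hz)

# Purely imaginary exponent: periodic with period 2*pi/|omega0|
x = complex_exponential(C, 1j * omega0)
T0 = 2 * math.pi / abs(omega0)
print(abs(x(0.3) - x(0.3 + T0)))     # close to zero

# Average power over one period, approximated with M equally spaced samples
M = 1000
power = sum(abs(x(k * T0 / M)) ** 2 for k in range(M)) / M
print(power, abs(C) ** 2)

# Negative real part in the exponent: the magnitude follows |C| * exp(r * t)
y = complex_exponential(C, -1 + 1j * omega0)
print(abs(y(1.0)), abs(C) * math.exp(-1))
```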

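Many of the concepts discussed from section 1.3 to section 1.6 have direct analogues for discrete-time signals. However, a key difference arises in the periodicity of discrete-time complex exponentials: A discrete-time complex exponential $e^{j\omega_0 n}$ is periodic only if $\omega_0/(2\pi)$ is a rational number. If $\omega_0/(2\pi) = m/N$ with $m$ and $N$ having no common factors, the fundamental period is $N$. In addition, $e^{j(\omega_0 + 2\pi)n} = e^{j\omega_0 n}$, so frequencies that differ by a multiple of $2\pi$ describe the same signal.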
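For a discrete-time exponential $e^{j2\pi (m/N) n}$ with integer $m$ and $N$, the fundamental period is the denominator of $m/N$ after reducing the fraction. The sketch below uses Python's `fractions.Fraction` for the reduction; the function names are assumptions chosen for this example.

```python
import cmath
import math
from fractions import Fraction

def fundamental_period(m, N):
    """Fundamental period of exp(j*2*pi*(m/N)*n) for integers m and N > 0."""
    # Fraction reduces m/N to lowest terms; its denominator is N / gcd(m, N)
    return Fraction(m, N).denominator

def x(n, m, N):
    """Sample n of the exponential with frequency omega0 = 2*pi*m/N."""
    return cmath.exp(1j * 2 * math.pi * m * n / N)

for m, N in [(1, 12), (3, 12), (5, 12), (8, 12)]:
    P = fundamental_period(m, N)
    # The last column is the mismatch after a shift by P (close to zero)
    print(m, N, P, abs(x(7 + P, m, N) - x(7, m, N)))
```

When $\omega_0/(2\pi)$ is irrational (for example $\omega_0 = 1$), no such integer period exists and the sequence never repeats exactly.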

1.7 Unit Impulse and Unit Step

In this section, several other basic signals of considerable importance in signal and system analysis are introduced.

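Consider the discrete-time unit impulse (or unit sample),

$$\delta[n] = \begin{cases} 0, & n \neq 0 \\ 1, & n = 0 \end{cases}$$

And the discrete-time unit step,

$$u[n] = \begin{cases} 0, & n < 0 \\ 1, & n \geq 0 \end{cases}$$

There is a close relationship between the unit impulse and the unit step in discrete time. In particular, the unit impulse is the first difference of the unit step:

$$\delta[n] = u[n] - u[n-1]$$

Conversely, the unit step is the running sum of the unit impulse:

$$u[n] = \sum_{m=-\infty}^{n} \delta[m]$$

An alternative form for the running sum (by change of variable $k = n - m$) is:

$$u[n] = \sum_{k=0}^{\infty} \delta[n-k]$$

A key property of the discrete-time unit impulse is the sifting property:

$$x[n]\,\delta[n - n_0] = x[n_0]\,\delta[n - n_0]$$

Summing over $n$ extracts the single sample at $n_0$:

$$\sum_{n=-\infty}^{\infty} x[n]\,\delta[n - n_0] = x[n_0]$$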
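The relationships between the discrete-time impulse and step can be checked directly. In this sketch the infinite sums are truncated to a finite range (the `start`, `lo` and `hi` parameters are assumptions standing in for $\pm\infty$), which is harmless here because the impulse is zero away from the origin.

```python
def delta(n):
    """Discrete-time unit impulse."""
    return 1 if n == 0 else 0

def u(n):
    """Discrete-time unit step."""
    return 1 if n >= 0 else 0

def first_difference(x):
    """Return y with y[n] = x[n] - x[n - 1]."""
    return lambda n: x(n) - x(n - 1)

def running_sum(x, n, start=-50):
    """Sum of x[m] for start <= m <= n; `start` stands in for minus infinity."""
    return sum(x(m) for m in range(start, n + 1))

def sift(x, n0, lo=-50, hi=50):
    """Sum of x[n] * delta[n - n0] over lo <= n <= hi, which picks out x[n0]."""
    return sum(x(n) * delta(n - n0) for n in range(lo, hi + 1))

signal = lambda n: n * n + 1  # hypothetical test signal

print([first_difference(u)(n) for n in range(-2, 3)])  # matches delta[n]
print([running_sum(delta, n) for n in range(-2, 3)])   # matches u[n]
print(sift(signal, 3), signal(3))
```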

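For continuous-time signals, the unit step is $u(t) = 0$ for $t < 0$ and $u(t) = 1$ for $t > 0$. The continuous-time unit impulse (or Dirac delta function) $\delta(t)$ is related to the unit step through the running integral:

$$u(t) = \int_{-\infty}^{t} \delta(\tau)\,d\tau$$

(This implies that the impulse has unit area: $\int_{-\infty}^{\infty} \delta(\tau)\,d\tau = 1$.)

Conversely, the unit impulse is the derivative of the unit step:

$$\delta(t) = \frac{du(t)}{dt}$$

The sifting property for the continuous-time impulse is

$$x(t)\,\delta(t - t_0) = x(t_0)\,\delta(t - t_0), \qquad \int_{-\infty}^{\infty} x(t)\,\delta(t - t_0)\,dt = x(t_0)$$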

The unit impulse should be considered an idealisation of a pulse that is infinitely short in duration, has unit area, and is infinitely tall. Any real physical system possesses some "inertia" or finite response time. The response of such a system to an input pulse that is sufficiently short (compared to the system's response time) is often independent of the exact pulse duration or shape, and depends primarily on its integrated effect (its area). For a system with a faster response, the input pulse must be shorter for this approximation to hold. The ideal unit impulse is considered short enough to probe the response of any linear time-invariant system.

1.8 Interconnection of Systems

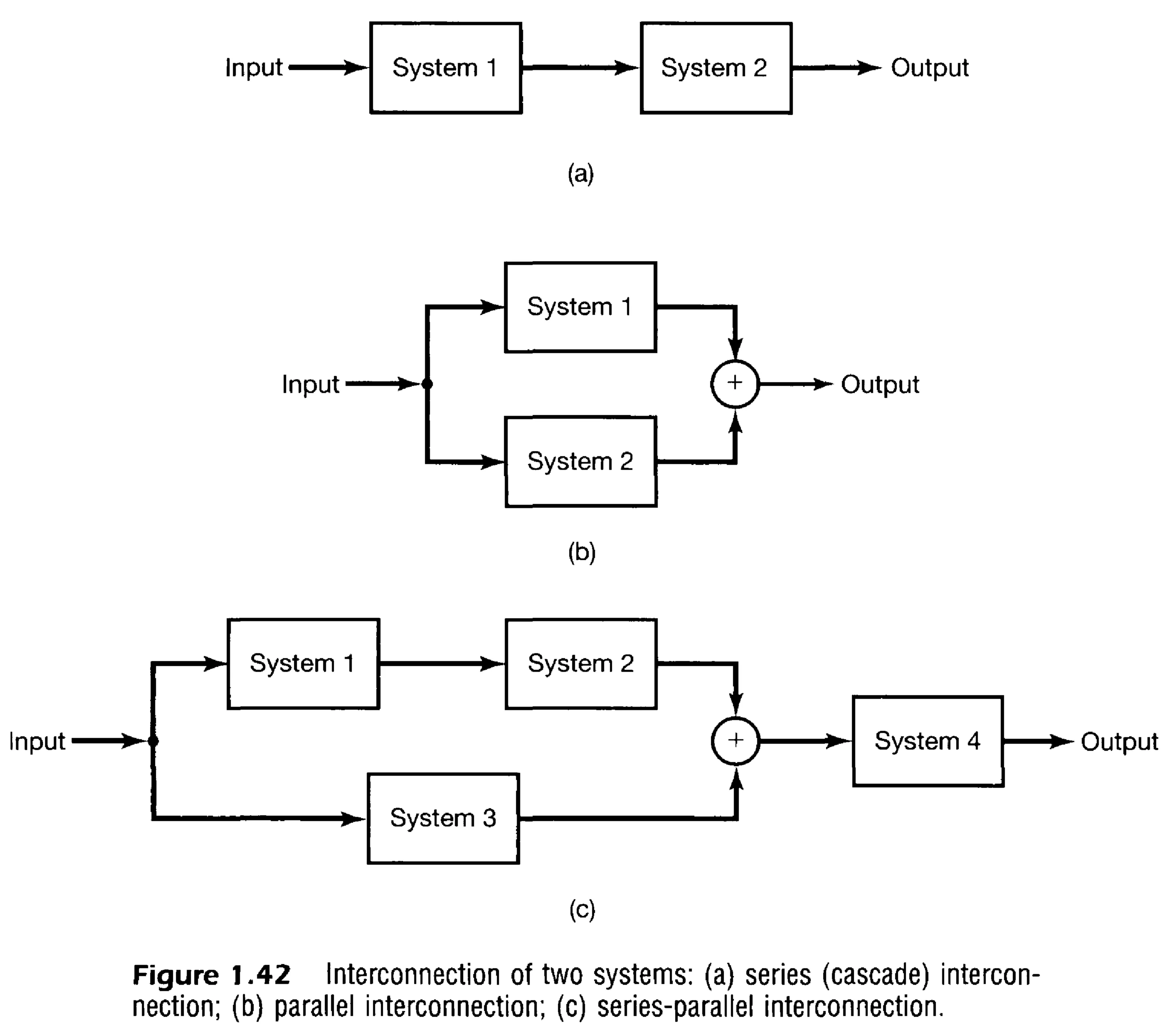

An important concept in systems analysis is the interconnection of systems, since many real-world systems are constructed as interconnections of several simpler subsystems. By decomposing a complex system into an interconnection of simpler subsystems, it may be possible to analyse or synthesise it using basic building blocks. The most frequently encountered connections are the series (or cascade) and parallel types:

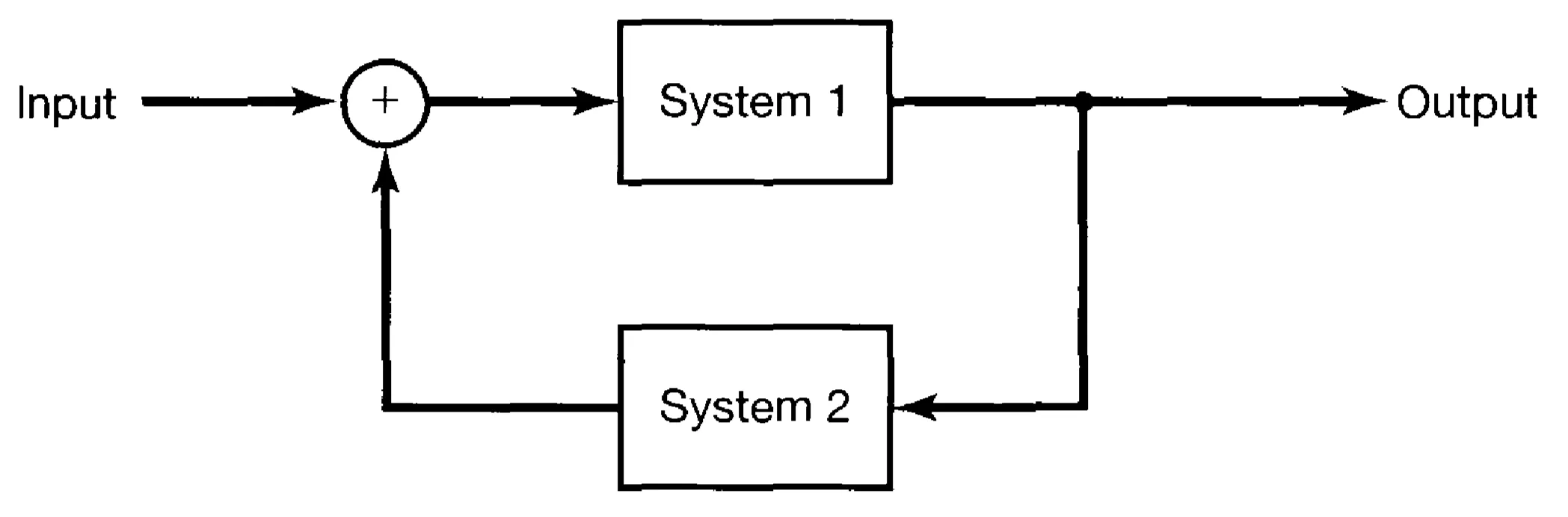

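The symbol $\oplus$ denotes addition, so the output of the parallel interconnection is the sum of the outputs of its subsystems. A third important type is the feedback interconnection.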

In this (negative) feedback configuration, the output of system 1 is the input to system 2. The output of system 2 is then fed back and subtracted from (or added to, for positive feedback) the external input to produce the actual input signal that drives system 1. These types of interconnections are prevalent in many practical systems, for instance, in control systems and amplifiers. Block diagram equivalences, such as shown below, are often useful for simplifying or analysing interconnected systems.
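The series, parallel and negative feedback interconnections can be sketched with discrete-time systems modelled as functions on finite lists of samples. The subsystems `gain2` and `delay1`, and the particular feedback loop (a forward gain `K` with a delayed feedback path scaled by `b`), are hypothetical choices made only to keep the example small.

```python
def series(sys1, sys2):
    """Cascade: the output of sys1 is the input of sys2."""
    return lambda x: sys2(sys1(x))

def parallel(sys1, sys2):
    """Both systems see the same input; their outputs are added."""
    return lambda x: [a + b for a, b in zip(sys1(x), sys2(x))]

def negative_feedback(K, b, x):
    """Forward path y[n] = K * e[n], with error e[n] = x[n] - b * y[n - 1]."""
    y, prev = [], 0.0
    for v in x:
        e = v - b * prev  # external input minus the fed-back output
        prev = K * e
        y.append(prev)
    return y

# Hypothetical subsystems
gain2 = lambda x: [2 * v for v in x]  # y[n] = 2 x[n]
delay1 = lambda x: [0] + x[:-1]       # y[n] = x[n - 1], zero initial condition

x = [1, 2, 3, 4]
print(series(gain2, delay1)(x))
print(parallel(gain2, delay1)(x))
print(negative_feedback(0.5, 1.0, [1.0, 1.0, 1.0]))
```

The feedback path includes a one-sample delay so that each output sample can be computed from already known values.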

1.9 Basic Properties

1.9.1 Memory

A system is said to be memoryless if its output at any given time depends only on the input at that same time. An example of a basic memoryless system is the identity system, $y(t) = x(t)$.

Systems that are not memoryless are said to possess memory. Their output depends on past (and for non-causal systems, possibly future) values of the input. As counterexamples:

- The accumulator system $y[n] = \sum_{k=-\infty}^{n} x[k]$ has memory, as the output at time $n$ depends on all past and present inputs.
- The delay system $y[n] = x[n-1]$ also has memory, as the output at time $n$ depends on the input at time $n - 1$.

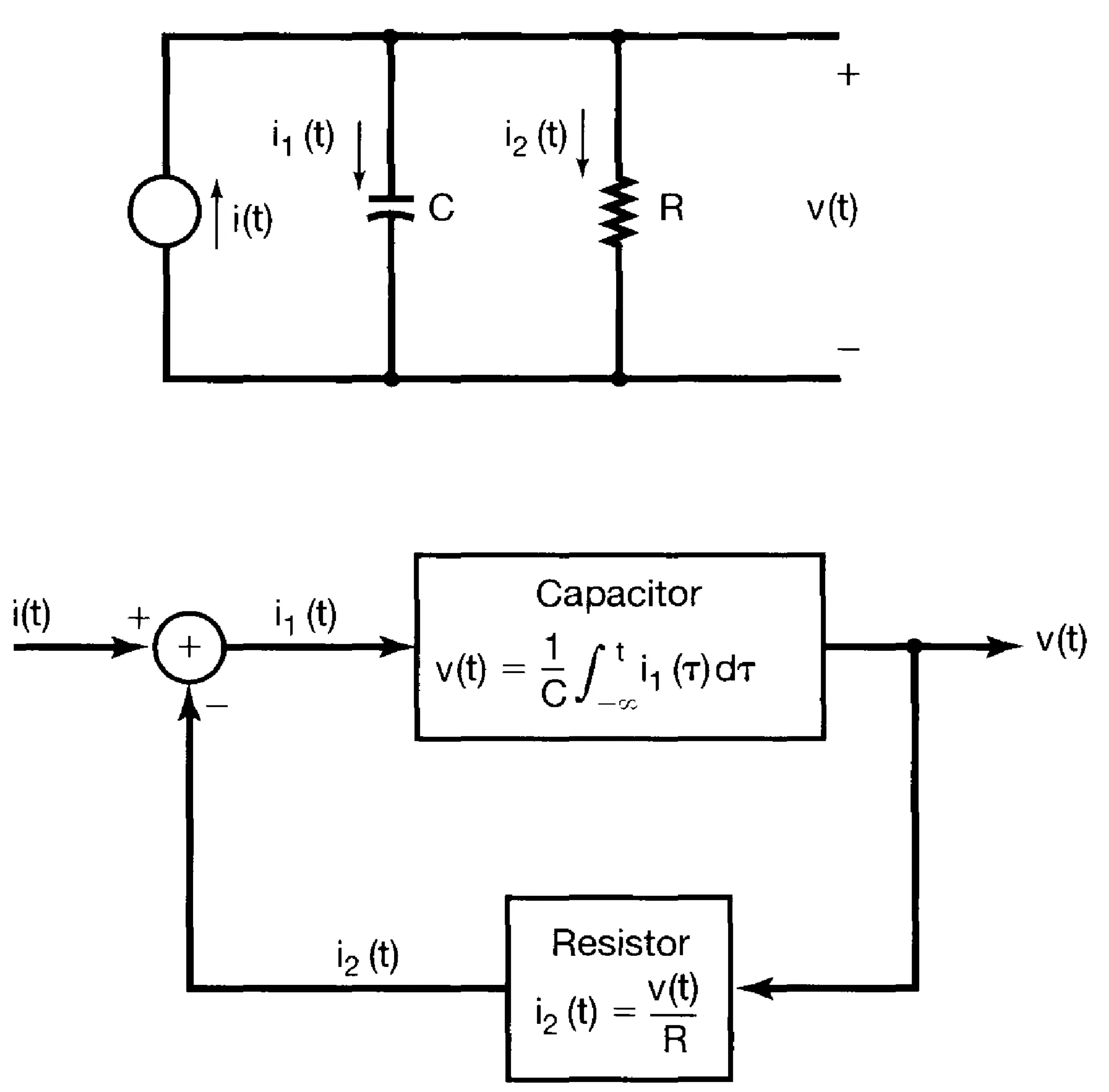

A capacitor is an example of a physical system component with memory, since the voltage across it, $v(t) = \frac{1}{C}\int_{-\infty}^{t} i(\tau)\,d\tau$, depends on the history of the current $i(t)$.
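One informal way to see memory is to change a past input sample and watch whether the present output changes. The sketch below does this for a memoryless squarer, an accumulator and a unit delay, assuming the input is zero before the first list element; the function names are illustrative.

```python
from itertools import accumulate

def squarer(x):
    """Memoryless: y[n] = x[n]**2 uses only the current sample."""
    return [v * v for v in x]

def accumulator(x):
    """Has memory: y[n] is the sum of x[k] for all k <= n."""
    return list(accumulate(x))

def unit_delay(x):
    """Has memory: y[n] = x[n - 1]."""
    return [0] + x[:-1]

x_a = [1, 2, 3, 4]
x_b = [9, 2, 3, 4]  # differs from x_a only in the first (past) sample

print(squarer(x_a)[3], squarer(x_b)[3])          # identical outputs at n = 3
print(accumulator(x_a)[3], accumulator(x_b)[3])  # different outputs at n = 3
print(unit_delay(x_a)[1], unit_delay(x_b)[1])    # different outputs at n = 1
```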

1.9.2 Invertibility and Inverse Systems

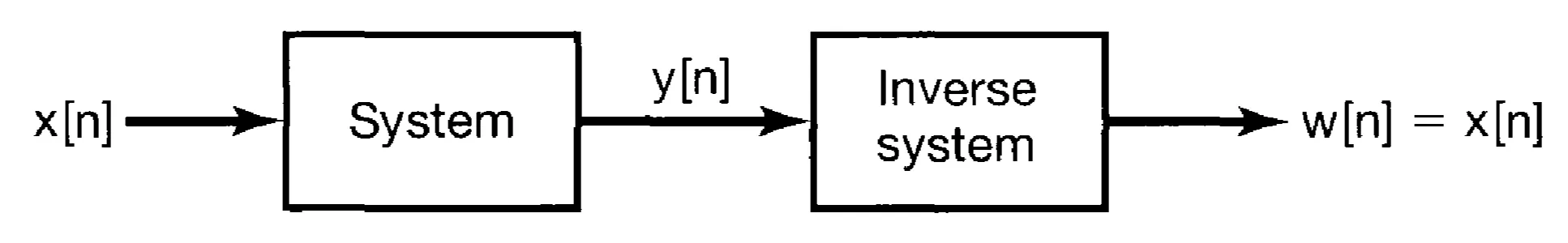

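A system is said to be invertible if distinct inputs produce distinct outputs. If a system is invertible, then an inverse system exists which, when cascaded with the original system, yields an output equal to the original system's input. That is, if system $S$ maps each input $x$ to an output $y$, then the inverse system $S^{-1}$ maps $y$ back to $x$, so the cascade of $S$ followed by $S^{-1}$ is the identity system.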

Invertibility is important in many contexts, such as signal processing (for instance, deconvolution to remove distortions) and communication systems (for instance, decoding an encoded signal). Lossless data compression, for example, requires that the encoding process must be invertible to allow perfect reconstruction of the original data.
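As a small illustration, the accumulator is invertible and its inverse is the first difference, whereas a squarer is not invertible because two distinct inputs can give the same output. The sketch assumes finite-length inputs that are zero before the first sample; the function names are illustrative.

```python
from itertools import accumulate

def accumulator(x):
    """y[n] = sum of x[k] for k <= n, with x[k] = 0 assumed for k < 0."""
    return list(accumulate(x))

def first_difference(y):
    """w[n] = y[n] - y[n - 1], with y[-1] = 0 assumed."""
    return [y[n] - (y[n - 1] if n > 0 else 0) for n in range(len(y))]

def squarer(x):
    """y[n] = x[n]**2, which discards the sign of the input."""
    return [v * v for v in x]

x = [3, -1, 4, 1, 5]
print(first_difference(accumulator(x)))  # recovers x
print(squarer([2, -3]), squarer([-2, 3]))  # distinct inputs, same output
```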

1.9.3 Causality

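A system is causal if its output at any time $t_0$ depends only on values of the input at the present time and in the past, that is, on $x(t)$ for $t \le t_0$. All memoryless systems are causal, since their output depends only on the present input.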

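Examples of non-causal systems include:

- The advance system $y[n] = x[n+1]$, whose output depends on a future input value.
- The centred moving average $y[n] = \frac{1}{3}\left(x[n-1] + x[n] + x[n+1]\right)$, which uses one future sample.
- The time-reversal system $y(t) = x(-t)$, whose output at a negative time $t$ depends on the input at the later time $-t$.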

1.9.4 Stability

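A system is stable in the Bounded-Input, Bounded-Output (BIBO) sense if every bounded input signal produces an output signal that is also bounded. That is, if an input $x(t)$ satisfies $|x(t)| \le B_x < \infty$ for all $t$, then the output $y(t)$ satisfies $|y(t)| \le B_y < \infty$ for all $t$, for some finite bound $B_y$.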

For instance, consider a simple pendulum with small oscillations (stable system) versus an inverted pendulum (unstable system): a small perturbation (input) to the inverted pendulum can lead to a large, unbounded output (falling over).
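A discrete-time counterpart of this contrast is sketched below. The accumulator turns a bounded unit step into an output that grows without limit, so it is not BIBO stable, while a leaky accumulator $y[n] = a\,y[n-1] + x[n]$ with $|a| < 1$ keeps the output for the same input below $1/(1 - a)$. Both systems and the value $a = 0.5$ are assumptions for this example, and a single bounded response does not by itself prove stability.

```python
def accumulator(x):
    """y[n] = y[n - 1] + x[n], starting from rest."""
    y, total = [], 0.0
    for v in x:
        total += v
        y.append(total)
    return y

def leaky_accumulator(x, a=0.5):
    """y[n] = a * y[n - 1] + x[n], starting from rest; |a| < 1 is assumed."""
    y, prev = [], 0.0
    for v in x:
        prev = a * prev + v
        y.append(prev)
    return y

for N in (10, 100, 1000):
    step = [1.0] * N  # bounded input: |x[n]| <= 1
    # The accumulator peak grows with N; the leaky peak stays at or below 2
    print(N, max(accumulator(step)), max(leaky_accumulator(step)))
```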

1.9.5 Time Invariance

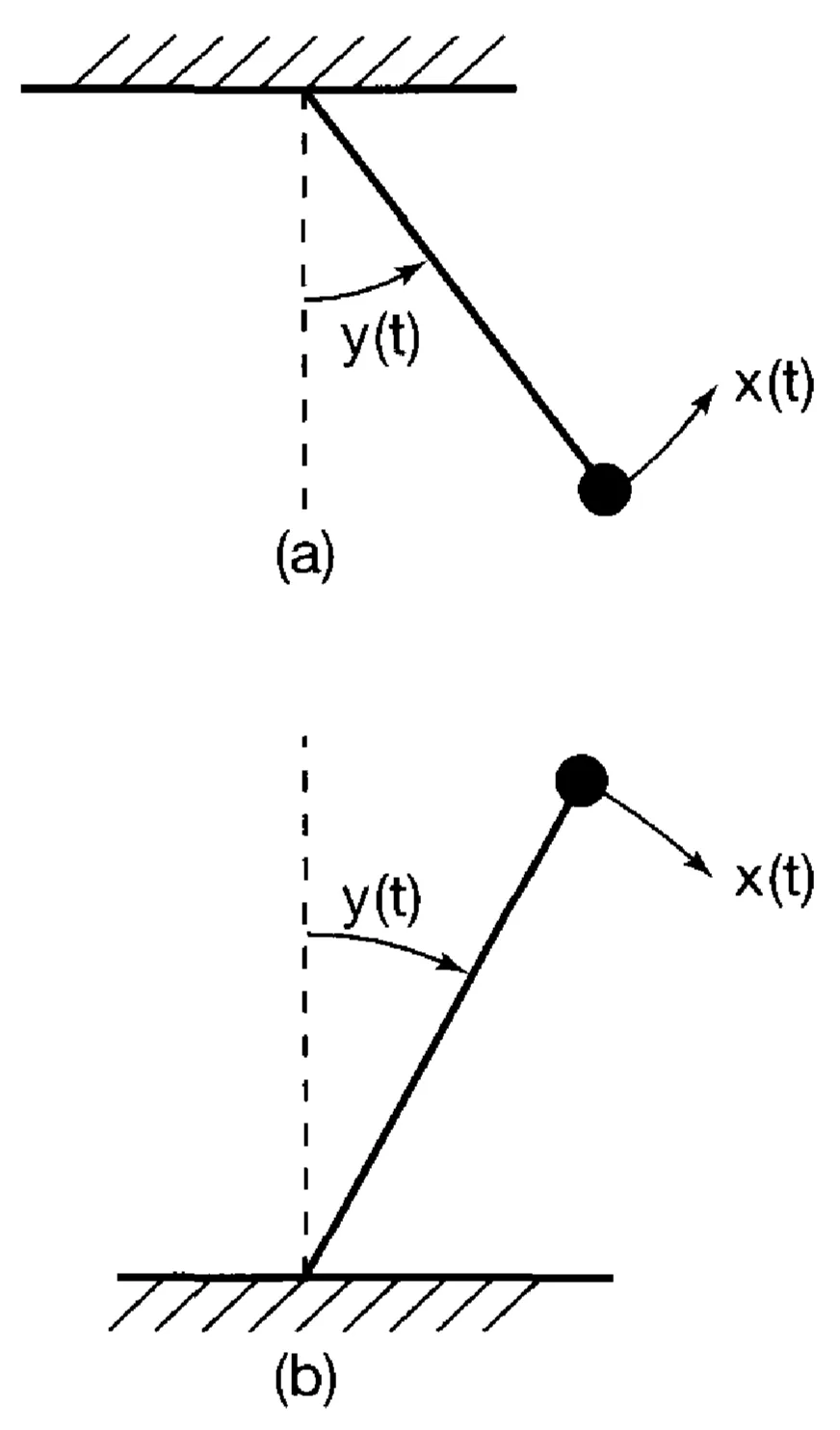

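A system is time-invariant if its behaviour and characteristics are fixed over time. Formally, a system is time-invariant if a time shift in the input signal causes an identical time shift in the output signal. That is, if an input $x(t)$ produces an output $y(t)$, then the shifted input $x(t - t_0)$ produces the output $y(t - t_0)$ for every shift $t_0$.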
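The definition suggests a simple numerical check: delay the input and compare the result with the delayed original output. The sketch below applies it to two hypothetical discrete-time systems on finite lists, with trailing zeros in the test input so that the delay does not push non-zero samples off the end. A mismatch disproves time invariance, while a match for one input is only supporting evidence.

```python
def delay(x, k):
    """Shift a finite sequence right by k samples, padding with zeros."""
    return [0] * k + x[:len(x) - k]

def shift_commutes(system, x, k):
    """True if delaying the input by k gives the original output delayed by k."""
    return system(delay(x, k)) == delay(system(x), k)

# Hypothetical systems
squarer = lambda x: [v * v for v in x]                   # y[n] = x[n]**2
scale_by_n = lambda x: [n * v for n, v in enumerate(x)]  # y[n] = n * x[n]

x = [1, 2, 3, 0, 0]
print(shift_commutes(squarer, x, 2))     # consistent with time invariance
print(shift_commutes(scale_by_n, x, 2))  # the gain depends on n, so the check fails
```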

1.9.6 Linearity

A system is linear if it satisfies the superposition principle. This principle combines two properties:

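- Additivity: If input $x_1(t)$ produces output $y_1(t)$, and input $x_2(t)$ produces output $y_2(t)$, then the input $x_1(t) + x_2(t)$ must produce the output $y_1(t) + y_2(t)$.
- Homogeneity (or Scaling): If input $x(t)$ produces output $y(t)$, then for any complex constant $a$, the input $a\,x(t)$ must produce the output $a\,y(t)$.

These two properties can be combined into a single condition for superposition: for any inputs $x_1(t)$ and $x_2(t)$ with outputs $y_1(t)$ and $y_2(t)$, and any complex constants $a$ and $b$, the input $a\,x_1(t) + b\,x_2(t)$ must produce the output $a\,y_1(t) + b\,y_2(t)$.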

Analogous definitions apply for discrete-time systems.
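The superposition condition can be tested numerically on discrete-time systems modelled as functions on lists. The sketch below compares the response to a weighted sum of two inputs with the same weighted sum of the individual responses, for three hypothetical systems. A failed check proves a system is not linear, while a passed check for one pair of inputs is only supporting evidence.

```python
def superposition_holds(system, x1, x2, a, b, tol=1e-9):
    """Compare system(a*x1 + b*x2) with a*system(x1) + b*system(x2)."""
    combined_input = [a * p + b * q for p, q in zip(x1, x2)]
    lhs = system(combined_input)
    rhs = [a * p + b * q for p, q in zip(system(x1), system(x2))]
    return all(abs(l - r) <= tol for l, r in zip(lhs, rhs))

# Hypothetical systems
gain = lambda x: [3 * v for v in x]     # y[n] = 3 x[n]
squarer = lambda x: [v * v for v in x]  # y[n] = x[n]**2
offset = lambda x: [v + 1 for v in x]   # y[n] = x[n] + 1

x1, x2 = [1, 2, 3], [0, -1, 4]
a, b = 2, -1.5
for name, system in [("gain", gain), ("squarer", squarer), ("offset", offset)]:
    print(name, superposition_holds(system, x1, x2, a, b))
```

The `offset` system fails the check even though its graph is a straight line, because a zero input does not produce a zero output.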